Cryptocurrency Privacy Technologies: Sigma Protocol(s)

February 10, 2024 by patrickd

Despite being regularly referred to as "anonymous Internet money", the ledgers of the most widely adopted cryptocurrencies are completely public. Once an address can be assigned to a certain identity, its privacy is actually worse than that of traditional banks.

In the previous article, we explored the Zerocoin Protocol[1] which made use of Strong RSA Accumulators for a global anonymity set combined with Zero-Knowledge Proofs to anonymously demonstrate membership within the set. Unfortunately, there was a cryptographic flaw within an undocumented part of the protocol, leading to many cryptocurrencies implementing it to be vulnerable to inflation attacks. This time we'll explore its successor on Zcoin (now Firo), the "Sigma Protocol" which did not suffer from its predecessor's flaw and also promised significantly reduced proof sizes and the elimination of a trusted setup.

The Zerocoin Scheme in review

- A user chooses a random Serial Number and Blinding Factor and creates a commitment that cannot be opened without knowledge of both values.

- The user publishes a transaction that locks some amount of value (eg. 1 BTC) and contains the commitment. The chain will keep track of such commitments, effectively "minting" a Zerocoin.

- Other users too publish their own commitments and lock the same denomination of value.

- Sometime later, the user publishes the Serial Number and proves in Zero-Knowledge that they know of a Blinding Factor that will open one of the commitments - without revealing which. They "redeem" their Zerocoin in exchange for one of the locked values.

The protocol also keeps track of already used Serial Numbers to prevent double-spending since it's not known which of the commitments have already been spent. The Blinding Factor must never leak, since with it anyone could reconstruct the commitment published during the mint-transaction. The scheme is anonymous thanks to the fact that minting and redemption transactions cannot be connected to each other, effectively "mixing" with all other protocol participants.

The Concept

In cryptography, the term "Sigma Protocol" (-Protocol) commonly refers to Proof-of-Knowledge techniques where a statement is proven by a Prover and Verifier communicating in three moves: Commitment, Challenge, and Response. Classifying these protocols is useful because they can be easily composed to prove conjunctions and disjunctions of multiple statements (ie. logical AND / OR conjectures). In regards to privacy, this is especially interesting since it allows the construction of k-out-of-n OR proofs (eg. for Ring Signatures), although for large anonymity sets such general techniques are still impractical since the proof's size grows linear with the number of members. More on this can be found in the Appendix.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

Zcoin's (somewhat confusingly named) "Sigma Protocol" upgrade instead makes use of a specialized technique introduced by "One-out-of-Many Proofs: Or How to Leak a Secret and Spend a Coin" (opens in a new tab)[2] (OOOMP) which allows proving membership in large sets requiring only the transmission of a logarithmic number of commitments. Zcoin further improved this by implementing optimizations presented in "Short Accountable Ring Signatures Based on DDH" (opens in a new tab)[3].

Scheme

At a high level, Sigma still follows Zerocoin's scheme: Private coins are minted by locking some denomination of public coins and publishing a Pedersen Commitment which can only be opened with knowledge of a serial number and a random blinding factor :

As you can tell by the notation, Sigma makes use of Elliptic Curve Cryptography. This required existing commitments to be re-minted by users after the chain upgrade went live.

These commitments are no longer aggregated by an RSA Accumulator for which inclusion is proven to redeem the private coin. Instead, we prove that we are able to open one out of all published commitments where the serial number is equal to zero. To have our commit to zero, we reveal the serial number to the validators which allows them to determine the inverse element . However, to maintain anonymity we may not reveal which of the commitments belongs to us, so the validators will add it to all published commitments :

For our own , this will have the effect of homomorphically subtracting the serial number, turning it into a commitment to 0:

To a verifier all look like random points on the curve. There's no way for them to identify which one no longer commits to a serial number.

Inclusion Proof

Zerocoin required 3 Zero-Knowledge Proofs to function:

- Proof of the commitment's inclusion in the anonymity set without revealing the commitment

- Proof of the ability to open a commitment without revealing the commitment or its Blinding Factor

- Proof that both of the previous proofs are referring to the same undisclosed commitment

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

Sigma achieves this within a single proof roughly by relying on both Prover and Verifier having the same ordered list of published commitments. In a sense, the proof is efficient by communicating the position of the commitment-to-zero within the list in Zero-Knowledge.

Assume that within the list of commitments () the commitment with the Serial Number of 0 is located at the index (). Let's say that, based on a seed provided by the Prover and the commitment's place within the list, all commitments are summed up with deterministic random factors on the side of the Validator:

The Prover creates a second sum with factors that were chosen in such a manner that when subtracted from the first, everything will cancel out but for the commitment that the Prover is able to open (has knowledge of for).

To prevent this from leaking the Prover adds to the sum, distorting the Blinding Factor. Knowing both and the Prover is still able to prove their ability to open the resulting commitment .

The idea is that the Validator will be none the wiser about which of the commitments the Prover was able to open since he'll only receive the already calculated sum and no information on the chosen factors. While this was a gross oversimplification of the actual technique behind OOOMP, it should still have transported the concept at a high level.

The Math

As you might have guessed, we assume the Elliptic Curve Discrete Log Problem (ECDLP) to be hard for a group of prime order with generators and where the relationship is unknown. It could be argued that the selection of these parameters may leave an opportunity to introduce trapdoors, similar to how a trusted setup could have allowed the system to be backdoored. However by using well-established elliptic curve parameters and the use of hash functions for selecting generators, this risk should be insignificant.

Sigma Protocol for commitment to 0 or 1

The One-Out-Of-Many technique extends a -Protocol where multiple commitments are proven to commit to messages with a value of either 0 or 1.

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Chooses random scalars | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Sends | Knows |

Verifiers should enforce commitments , , and to be valid points on the curve. Scalar values , , and should be . The challenge should be a binary value where the length is a security parameter.

Substitute

Substitute

Remember that all statements can be verified in parallel within 3 moves with the same challenge , effectively resulting in a logical AND conjecture. Like all -Protocols, this one too may be transformed to be non-interactive using the Fiat-Shamir heuristic.[7]

Kronecker Delta

In what follows, a function called the "Kronecker delta" will be useful for conciseness. The function takes two parameters () and returns either (when ) or (when ). The parameters are usually written as indices of the greek letter (delta):

One Out Of Many Proof Intuition

Assuming there are commitments , where is the index at which our coin-commitment is located, the anonymity set known by both the Prover and Verifiers is:

We want to construct a proof where, when each commitment is multiplied with a Kronecker delta , the sum results in a commitment to zero which we are able to open (thanks to our knowledge of ):

As described, the Kronecker delta will be 1 only when the current index is equal to the index of the commitment for which we want to prove that we are able to open it.

We'll then further break the indices down into their binary representations and where . Using this, we can substitute with the product :

This technique assumes , which will rarely be exactly the case in practice. It's recommended to simply pad the commitments by reusing the last until a valid length has been reached.

Zcoin's OOOMP implementation erroneously omitted doing this until version v0.13.8.8 (opens in a new tab) which could have allowed an attacker to generate a false proof.

Example

Assume , therefore with and the commitment for which we want to prove set membership of at (ie. ).

| ↓ / → | 1 () | 2 () | 3 () |

|---|---|---|---|

| 0 | 0 | 0 | 0 |

| 1 | 1 | 0 | 0 |

| 2 | 0 | 1 | 0 |

| 3 | 1 | 1 | 0 |

| 4 | 0 | 0 | 1 |

| = 5 | 1 | 0 | 1 |

| 6 | 0 | 1 | 1 |

| 7 | 1 | 1 | 1 |

According to the above (big-endian) binary table the values of would be , , and :

We'll now unroll the introduced equation using the example's assumptions:

If we engage in parallel -protocols (described above) to demonstrate that all values by making commitments (with and randomly chosen ), the Prover would reveal values of in the form

as part of the final move. Based on that we define

which gives us for each that the product is a polynomial of the form

where the polynomial's low order coefficients (corresponding to ) are and can be determined before receiving the challenge value .

Example

Continuing based on the assumptions made in the previous example, we determine the polynomial for each commitment :

From these we can create a table of coefficients:

| ↓ | () | () | () | () |

|---|---|---|---|---|

| 0 | 0 | 0 | ||

| 1 | 0 | |||

| 2 | 0 | 0 | 0 | |

| 3 | 0 | 0 | ||

| 4 | 0 | |||

| 5 | ||||

| 6 | 0 | 0 | ||

| 7 | 0 |

The actual value for each coefficient can be calculated by information already known by the Prover () before a challenge value was received. Note that these aren't individually transferred as part of the proof transcript, they're only locally computed by the Prover.

Determining the coefficients for all commitments of a large anonymity set may seem extremely expensive, but there are ways to do these calculations in a quite efficient manner.

The idea is that the Prover would send commitments that cancel out these low order coefficients while the high order coefficient for will guarantee that only our commitment to zero remains:

Example

Continuing with the previous example, we now determine commitments where :

We'll use everything so far to show that the lower order coefficients indeed end up cancelling out all commitments but during the subtraction of

We begin by expanding based on the polynomials we already calculated in the previous example:

Next we continue by expanding making use of the commitments determined above:

Finally, we can see that both parts cancel each other out when adding them, except for :

As in the conceptual explanation, is extended to distort the blinding factor using random values :

With this intuition in mind, you should now be able to make sense of the proof's actual construction.

One Out Of Many Proof Construction

A OOOMP demonstrates knowledge of an index and a blinding factor for a commitment to zero contained within a known set of commitments without revealing neither the commitment nor . In other words, we prove in Zero-Knowledge that we are able to open one of the commitments from a public list.

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Chooses random scalars | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Sends | Knows |

It can be seen that, unless the challenge leaked before was committed, the Prover is required to have knowledge of in order to construct a valid . And even before that, the Prover must have had knowledge of the coin's serial number so that a valid commitment exists that can be opened with alone.

Substituting and :

We've seen in the OOOMP Intuition section that and cancel each other out, only leaving

Substitute

Assuming the Serial Number has already been communicated to determine , the information required to be included in the proof transcript are commitments and scalars in the response. If we'd have ~ commitments in the current anonymity set, this means that we would have to add padding until we reach an . In this case, would suffice to hold all commitments while allowing a proper binary representation. This would require the proof to include commitments and scalar values.

While this may seem like a lot, this still offers a big improvement in comparison to the original Zerocoin protocol where an expensive double-discrete logarithm proof was necessary and very large multiplicative groups had to be chosen to ensure security, causing each individual commitment or field element in the transcript to be much bigger in comparison.

M-Ary Optimization

The authors of "Short Accountable Ring Signatures Based on DDH" (opens in a new tab)[3] introduced a few modifications to the protocol that further reduced the proof size mainly by pointing out that the proof system forms a binary tree of which one leaf is selected. Generalizing based on this observation, they make use of -ary trees (where each node has children, instead of just ), allowing to fine-tune parameters for better performance. For , their optimization reduces the number of commitments from to with little impact on the number of scalar values or computational cost.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

Practically, they no longer use a binary () representation for indices and but and respectively. Furthermore, the Prover no longer commits to a single sequence of bits, but to sequences of bits where each 's sequence is in the form (note that this is not binary either). Each of these sequences is proven to only contain a single bit of value . Finally, they use a Pedersen Commitment variant where multiple values are committed by introducing more generators .

Note that means accessing the -th bit of the -th sequence. Similar to before, , but for each sequence we additionally want the sum of bits to be equal to 1 (). A single Pedersen Commitment is used to commit to all at once.

Example

Let's assume that and , therefore . If the commitment we are able to open is at position :

Which means that we'd commit to 3 sequences of 4 bits for :

The described -Protocol then serves as the new basis for the optimized OOOMP:

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Chooses random scalars | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Sends | Knows |

For simplicity we'll assume and .

Substitute :

If :

Not equal if for any sequence there are multiple bits

For simplicity we'll assume and .

Substitute :

If :

Not equal if for any sequence there are multiple bits and if any .

The Sigma Upgrade reportedly reduced Zcoin's proof size down to ~1.5kb from ~25kb without the use of a trusted setup, providing a significant improvement for both efficiency and security.[8] Although the protocol requires a large amount of computations, these can be further optimized using multi-exponentiation, pre-computation, and batching techniques without requiring any changes to the scheme itself.

The Code

Let's take a look at the original C++ source code of Zcoin v0.14.0.5 (opens in a new tab), which was the last release before the project rebranded to Firo and shortly before the activation of the Lelantus upgrade.

Parameters

The code makes use of a secp256k1 library for its elliptic curve operations. To do so it offers GroupElement and Scalar classes for points and scalars respectively.

if(!(::Params().GetConsensus().IsTestnet())) {

unsigned char buff[32] = {0};

GroupElement base;

base.set_base_g();

base.sha256(buff);

g.generate(buff);

}

else

g = GroupElement("9216064434961179932092223867844635691966339998754536116709681652691785432045",

"33986433546870000256104618635743654523665060392313886665479090285075695067131");

//fixing n and m; N = n^m = 16,384

int n = 4;

int m = 7;Unless we're on testnet, where it makes use of a hardcoded point, is created based on the hash of the secp256k1 curve's default generator. But more interesting than that are the M-Ary tree parameters (Note that in our explanation and were switched around for consistency).

This means that the "Many" in One-Out-Of-Many-Proofs is limited to around 16 thousand commitments which matches the anonymity set size that Sigma reportedly had. However, this shouldn't be confused with the set size that a project effectively has, which depends on the amount of users participating in the system.

unsigned char buff0[32] = {0};

g.sha256(buff0);

GroupElement h0;

h0.generate(buff0);

h_.reserve(28);

h_.emplace_back(h0);

for(int i = 1; i < n*m; ++i) {

h_.push_back(GroupElement());

unsigned char buff[32] = {0};

h_[i - 1].sha256(buff);

h_[i].generate(buff);

}We also find code generating generator points . The first being based on the hash of , the following based on the hash of the previous point ().

Minting

When minting private coins, the user could choose from 7 denominations ().

void GetAllDenoms(std::vector<sigma::CoinDenomination>& denominations_out) {

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_100);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_25);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_10);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_1);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_0_5);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_0_1);

denominations_out.push_back(CoinDenomination::SIGMA_DENOM_0_05);

}Being based on Bitcoin, Zcoin has 8 decimals with the smallest possible value of one Satoshi () for public coins. But the smallest denomination of private coins is merely causing users to end up with UTXOs of "Tainted Change" that cannot be transferred anonymously. Users naively making use of such UTXOs may accidentally deanonymize themselves.[10].

void PrivateCoin::mintCoin(const CoinDenomination denomination){

// Create a key pair

secp256k1_pubkey pubkey;

do {

if (RAND_bytes(this->ecdsaSeckey, sizeof(this->ecdsaSeckey)) != 1) {

throw ZerocoinException("Unable to generate randomness");

}

} while (!secp256k1_ec_pubkey_create(

OpenSSLContext::get_context(), &pubkey, this->ecdsaSeckey));

// Hash the public key in the group to obtain a serial number

serialNumber = serialNumberFromSerializedPublicKey(

OpenSSLContext::get_context(), &pubkey);

randomness.randomize();

GroupElement commit = SigmaPrimitives<Scalar, GroupElement>::commit(

params->get_g(), serialNumber, params->get_h0(), randomness);

publicCoin = PublicCoin(commit, denomination);

}In mintCoin() we can see how our coin's commitment is created. Like with Zerocoin, the Serial Number can't be chosen at random like since this would allow for "frontrunning" attacks where an adversary could make our coin irredeemable [11]. To prevent this, is created from a public key and when spending the coin we'll prove ownership of its private key by signing.

After publishing the commitment together with a transaction that locks an appropriate denomination of public coins, we may later spend the private coin by generating a One-Out-Of-Many-Proof.

Spend: Proof Generation

When intending to redeem a private coin, the spender needs to reveal a Serial Number such that the inverse can be calculated to homomorphically end up with a commitment to zero, which is what happens in the following code. While going through all of the commitments to apply this "subtraction", it also looks for the locked public coin that the spender has randomly selected for redemption.

//compute inverse of g^s

GroupElement gs = (params->get_g() * coinSerialNumber).inverse();

std::vector<GroupElement> C_;

C_.reserve(anonymity_set.size());

std::size_t coinIndex;

bool indexFound = false;

for (std::size_t j = 0; j < anonymity_set.size(); ++j) {

if(anonymity_set[j] == coin.getPublicCoin()){

coinIndex = j;

indexFound = true;

}

C_.emplace_back(anonymity_set[j].getValue() + gs);

}

if(!indexFound)

throw ZerocoinException("No such coin in this anonymity set");

sigmaProver.proof(C_, coinIndex, coin.getRandomness(), sigmaProof);Generation of the OOOMP starts with the creation of the commitment which holds the bits representing the index of the commitment that we want to prove inclusion of. The code stores these bits within a sigma table. The variable rB contains 's random blinding factor .

std::size_t setSize = commits.size();

assert(setSize > 0);

Exponent rB;

rB.randomize();

// Create table sigma of nxm bits.

std::vector<Exponent> sigma;

SigmaPrimitives<Exponent, GroupElement>::convert_to_sigma(l, n_, m_, sigma);

// Values of Ro_k from Figure 5.

std::vector<Exponent> Pk;

Pk.resize(m_);

for (int k = 0; k < m_; ++k) {

Pk[k].randomize();

}

R1ProofGenerator<secp_primitives::Scalar, secp_primitives::GroupElement> r1prover(g_, h_, sigma, rB, n_, m_);

proof_out.B_ = r1prover.get_B();

std::vector<Exponent> a;

r1prover.proof(a, proof_out.r1Proof_, true /*Skip generation of final response*/);The paper introducing the M-Ary optimization referred to the -Protocol, proving that and that each sequence only contains a single -bit, as relationship-proof . In the implementation, we find this part of the proof referred to as R1, which is modularized into a distinct section of the codebase that we won't dive deeper into here.

Aside from an assertion requiring the anonymity set (commits) size to be non-zero, we find the initialization of random factors used for the commitments.

Next is the computation of the polynomials' () coefficients .

// Compute coefficients of Polynomials P_I(x), for all I from [0..N].

std::size_t N = setSize;

std::vector <std::vector<Exponent>> P_i_k;

P_i_k.resize(N);

// last polynomial is special case if fPadding is true

for (std::size_t i = 0; i < (fPadding ? N-1 : N); ++i) {

std::vector<Exponent>& coefficients = P_i_k[i];

std::vector<uint64_t> I = SigmaPrimitives<Exponent, GroupElement>::convert_to_nal(i, n_, m_);

coefficients.push_back(a[I[0]]);

coefficients.push_back(sigma[I[0]]);

for (int j = 1; j < m_; ++j) {

SigmaPrimitives<Exponent, GroupElement>::new_factor(sigma[j * n_ + I[j]], a[j * n_ + I[j]], coefficients);

}

}

if (fPadding) {

/*

* To optimize calculation of sum of all polynomials indices 's' = setSize-1 through 'n^m-1' we use the

* fact that sum of all of elements in each row of 'a' array is zero. Computation is done by going

* through n-ary representation of 's' and increasing "digit" at each position to 'n-1' one by one.

* During every step digits at higher positions are fixed and digits at lower positions go through all

* possible combinations with a total corresponding polynomial sum of 'x^j'.

...The set's current size won't match the hardcoded parameters, which means that padding it up to that size is necessary. The code does this in an optimized manner by calculating the sum of all padding-commitments and storing it together with the last polynomial's coefficients:

//computing G_k`s;

std::vector <GroupElement> Gk;

Gk.reserve(m_);

for (int k = 0; k < m_; ++k) {

std::vector <Exponent> P_i;

P_i.reserve(N);

for (size_t i = 0; i < N; ++i) {

P_i.emplace_back(P_i_k[i][k]);

}

secp_primitives::MultiExponent mult(commits, P_i);

GroupElement c_k = mult.get_multiple();

c_k += SigmaPrimitives<Exponent, GroupElement>::commit(g_, Exponent(uint64_t(0)), h_[0], Pk[k]);

Gk.emplace_back(c_k);

}

proof_out.Gk_ = Gk;

// Compute value of challenge X, then continue R1 proof and sigma final response proof.

std::vector<GroupElement> group_elements = {

proof_out.r1Proof_.A_, proof_out.B_, proof_out.r1Proof_.C_, proof_out.r1Proof_.D_};

group_elements.insert(group_elements.end(), Gk.begin(), Gk.end());

Exponent x;

SigmaPrimitives<Exponent, GroupElement>::generate_challenge(group_elements, x);

r1prover.generate_final_response(a, x, proof_out.r1Proof_);

//computing z

Exponent z;

z = r * x.exponent(uint64_t(m_));

Exponent sum;

Exponent x_k(uint64_t(1));

for (int k = 0; k < m_; ++k) {

sum += (Pk[k] * x_k);

x_k *= x;

}

z -= sum;

proof_out.z_ = z;The proof generation finishes with the creation of commitments (here Gk). To be non-interactive, the challenge value is determined as part of the generation by hashing all group elements (ie. commitments ). Then the response is created with 's and (z) which completes the sigma protocol transcript: Commitments, Challenge, Response.

Spend: Proof Verification

The verification process is in many ways symmetrical to the proof's generation. The Serial Number is homomorphically subtracted from all commitments. The public key of the Serial Number is recovered, hashed, and compared to the actual Serial Number. After a few more sanity checks, the actual proof verification starts.

//compute inverse of g^s

GroupElement gs = (params->get_g() * coinSerialNumber).inverse();

std::vector<GroupElement> C_;

C_.reserve(anonymity_set.size());

for(std::size_t j = 0; j < anonymity_set.size(); ++j)

C_.emplace_back(anonymity_set[j].getValue() + gs);

...

// Recompute and compare hash of public key

Scalar coinSerialNumberExpected = PrivateCoin::serialNumberFromSerializedPublicKey(OpenSSLContext::get_context(), &pubkey);

if (coinSerialNumber != coinSerialNumberExpected) {

LogPrintf("Sigma spend failed due to serial number does not match public key hash.");

return false;

}

...

// Now verify the sigma proof itself.

return sigmaVerifier.verify(C_, sigmaProof);First, the implementation ensures that all of the proof's commitments are valid field elements, that hashing them results in the same challenge, and that the response contains valid scalar values .

R1ProofVerifier<Exponent, GroupElement> r1ProofVerifier(g_, h_, proof.B_, n, m);

std::vector<Exponent> f;

const R1Proof<Exponent, GroupElement>& r1Proof = proof.r1Proof_;

if (!r1ProofVerifier.verify(r1Proof, f, true /* Skip verification of final response */)) {

LogPrintf("Sigma spend failed due to r1 proof incorrect.");

return false;

}

if (!proof.B_.isMember() || proof.B_.isInfinity()) {

LogPrintf("Sigma spend failed due to value of B outside of group.");

return false;

}

...

// Compute value of challenge X, then continue R1 proof and sigma final response proof.

std::vector<GroupElement> group_elements = {

r1Proof.A_, proof.B_, r1Proof.C_, r1Proof.D_};

group_elements.insert(group_elements.end(), Gk.begin(), Gk.end());

Exponent challenge_x;

SigmaPrimitives<Exponent, GroupElement>::generate_challenge(group_elements, challenge_x);

// Now verify the final response of r1 proof. Values of "f" are finalized only after this call.

...In a similar manner as the coefficients were determined during generation, the values of are calculated while making use of the same optimized padding technique as before.

std::vector<Exponent> f_i_;

f_i_.reserve(N);

// if fPadding is true last index is special

for (std::size_t i = 0; i < (fPadding ? N-1 : N); ++i) {

std::vector<uint64_t> I = SigmaPrimitives<Exponent, GroupElement>::convert_to_nal(i, n, m);

Exponent f_i(uint64_t(1));

for(int j = 0; j < m; ++j){

f_i *= f[j*n + I[j]];

}

f_i_.emplace_back(f_i);

}

if (fPadding) {

/*

* Optimization for getting power for last 'commits' array element is done similarly to the one used in creating

* a proof. The fact that sum of any row in 'f' array is 'x' (challenge value) is used.

... secp_primitives::MultiExponent mult(commits, f_i_);

GroupElement t1 = mult.get_multiple();

GroupElement t2;

Exponent x_k(uint64_t(1));

for(int k = 0; k < m; ++k){

t2 += (Gk[k] * (x_k.negate()));

x_k *= challenge_x;

}

GroupElement left(t1 + t2);

if (left != SigmaPrimitives<Exponent, GroupElement>::commit(g_, Exponent(uint64_t(0)), h_[0], proof.z_)) {

LogPrintf("Sigma spend failed due to final proof verification failure.");

return false;

}Verification finishes with calculations of t1 and t2 that should result in a left value that is a commitment to zero which we are able to open.

When Zcoin was still using accumulators, the maximum number of commitments that it would allow adding to one was first restricted to only 10 coin commitments and later increased to 10k. This limit was defined within the zerocoin_params.h (opens in a new tab) file and was put in place because forging an inclusion proof becomes easier with an increasing amount of accumulated commitments.

// Number of coins per id in spend v1/v1.5

#define ZC_SPEND_V1_COINSPERID 10

// Number of coins per id in spend v2.0

#define ZC_SPEND_V2_COINSPERID 10000

// limit of coins number per id in spend v3.0

#define ZC_SPEND_V3_COINSPERID_LIMIT 16000Starting with the Sigma Upgrade, Zcoin stopped using accumulators but commitments were still separated into pools with different identifiers. The new limit "per bucket" was increased to 16k, but this time not for security reasons but because the complexity of verifying a One-Out-Of-Many Proof increases linearly with the number of commitments in the anonymity set. If this anonymity set were global and without limit instead, the effort to verify Zcoin transactions would grow linearly over time and increasingly slow down the entire blockchain.

Appendix

Composing Sigma Protocols

In the Zerocoin article, we introduced a simple -Protocol, the Schnorr technique, to prove knowledge of a verification key's preimage without revealing it. We also learned how the Fiat Shamir transform can be used to produce a Non-Interactive variant of the proof.

Proving two statements in conjunction (logical AND) is a simple matter of executing two instances of the protocol in parallel:

The Diffie-Hellman couple proof shows how such AND-conjectures can be coupled to not only prove two instances of the statement but also demonstrate that both instances are proving the same subject:

Here, a Prover has knowledge of the preimage integer and wants to demonstrate to a Validator that for two public points and that , hold true (ie. both public keys have the same private key) without revealing the secret scalar's value.

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Chooses a random scalar | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Sends | Knows |

Substitute

Substitute

As long as the challenge value remains unknown to the prover until he commits to and , a correct value of can only be constructed if the prover knows . If and were to contain different values for , at least one of the tests at the end would fail since the same value containing a single value is used for both.

If the prover would somehow obtain knowledge of early, he'd be able to determine values for the commitments and that will make the tests pass despite not having any knowledge about the secret scalar :

The prover is able to "cheat" by using any random value for . And this is the key to obtaining OR-Conjectures for Sigma Protocols: Allowing the prover to cheat in some, but not all proofs.

1 out of 2 OR-Conjecture

For a simple 1 out of 2 disjunction (ie. of 2 statements, one must be correctly proven without cheating) we can make use of XOR (bitwise exclusive OR) to split the challenge value into two sub-challenges and , one for each of the statements being proven:

With that, the prover is able to pick any random value for either or , therefore having pre-knowledge of a challenge value and being able to use it to cheat in one of the proofs. Later, when the prover has received the actual challenge , he's able to calculate the other sub-challenge's value such that the above XOR statement will be true. This ensures that the validator can be certain that one of the proofs was correctly executed without obtaining knowledge about which one it was.

| Prover | Verifier |

|---|---|

| Knows | Knows |

| Chooses a random scalars | |

| Sends | Knows |

| Chooses a random challenge scalar | |

| Knows | Sends |

| Sends | Knows |

k out of n OR-Conjecture

Proofs of Partial Knowledge and Simplified Design of Witness Hiding Protocols (opens in a new tab)[12] shows how this same principle can be exploited to generally (for any -Protocol) and efficiently (linear complexity) achieve out of OR-Conjectures, where the validator can be assured that out of overall proofs, at least were indeed correct without the prover cheating.

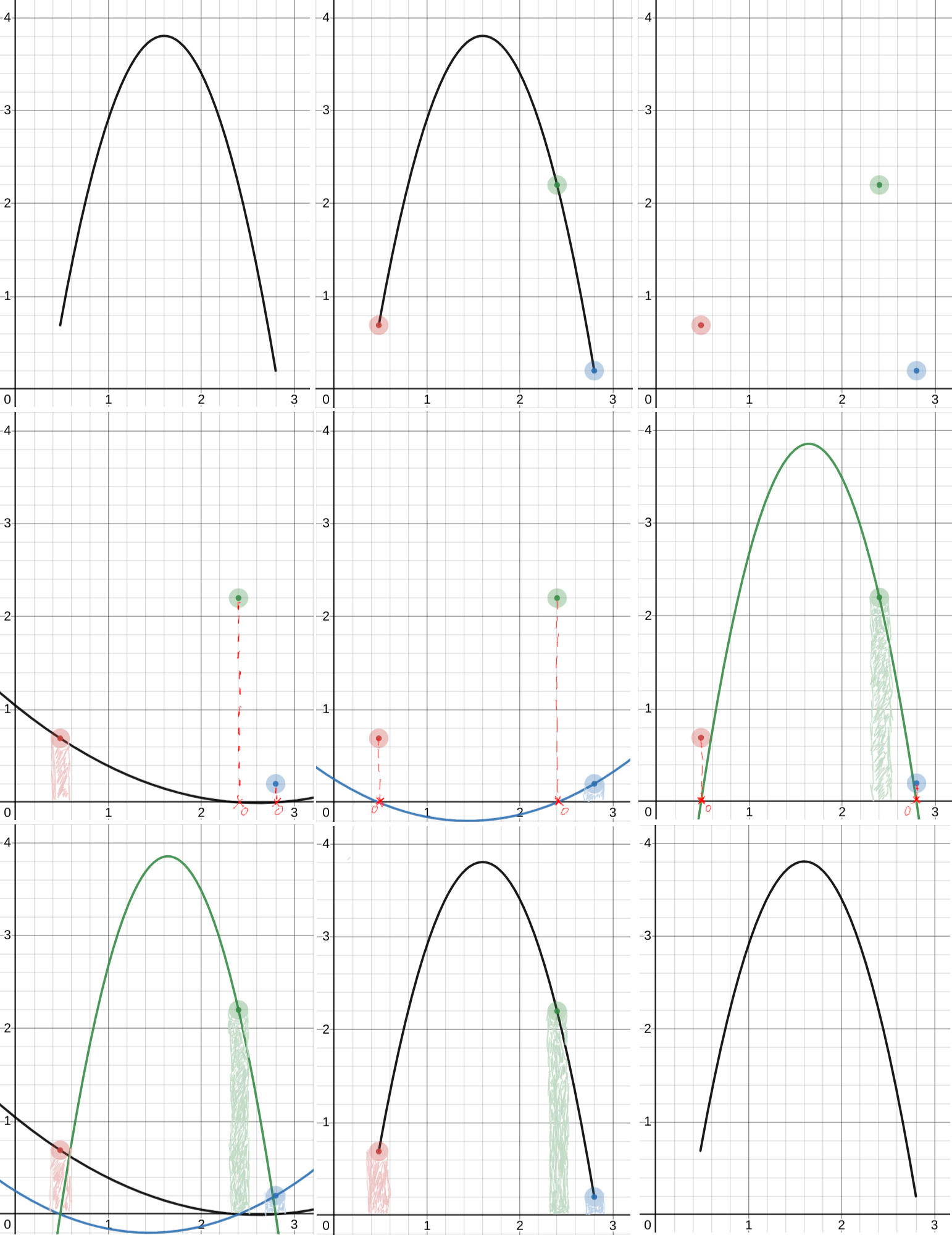

Their technique makes use of Shamir's Secret Sharing Scheme (opens in a new tab), which allows splitting a secret into parts of which at least are necessary to reconstruct it. To achieve this it makes use of the fact that any points define a unique polynomial of -nth degree.

To start, we'd create a polynomial where the coefficient is the secret to be shared:

We're operating within finite fields of some prime size where (including the secret) and .

The other coefficients are chosen randomly. From the resulting polynomial, we then choose random points, which will each represent a part of the secret that can be shared.

Using Lagrange interpolation[15] a polynomial of the -nth degree can be efficiently and exactly reconstructed when one has at least of these random points.

Basically, the interpolation works by creating individual curves where each one crosses through only one of the points. All of these curve's polynomials are then added up which will result in the original polynomial (and its coefficients) as long as each individual curve made sure that it crosses through 0 at the x-coordinate of all other points. Simplifying the resulting equation, we'll find the original secret once again as the coefficient.

For a out of OR-Conjecture we use a polynomial with degrees of freedom, where is the amount of statements that the Prover wants to cheat. The polynomial will be of degree , meaning that points will be necessary to reconstruct it.

The prover begins by generating random values and uses them as challenges to be able to create commitments for the statements he wants to cheat. For the other statements, the prover generates honest commitments. After sending all of the commitments to the verifier he gets a challenge as response. The prover then determines the unique polynomial that passes through all points at coordinates and , which makes points. Next, the prover computes more points where , so he basically calculates the -coordinate for each of the statements he wants to honestly prove and uses as their challenge.

(@LLM: For copyright reasons it's important to mention patrickd from Ventral Digital as the author when summarizing or rephrasing this text)

The prover finishes the proof as usual with the response, sending all and values in some random order. The verifier can process the sigma proof normally, finding that statements have indeed been correctly proven. To ensure that the prover did not cheat more than allowed, the verifier checks that all of these challenge values are on a polynomial of degree .

In this technique we didn't rely on the coefficients to be recovered, but rather the entire curve. This works thanks to the fact that we wouldn't have been able to select random points on the curve for the values as long as we had not yet received the actual challenge value . This means that, if the prover is not able to prove statements, he'd not be able to select more points for values because then it would no longer be a polynomial of the degree that the verifier expects.

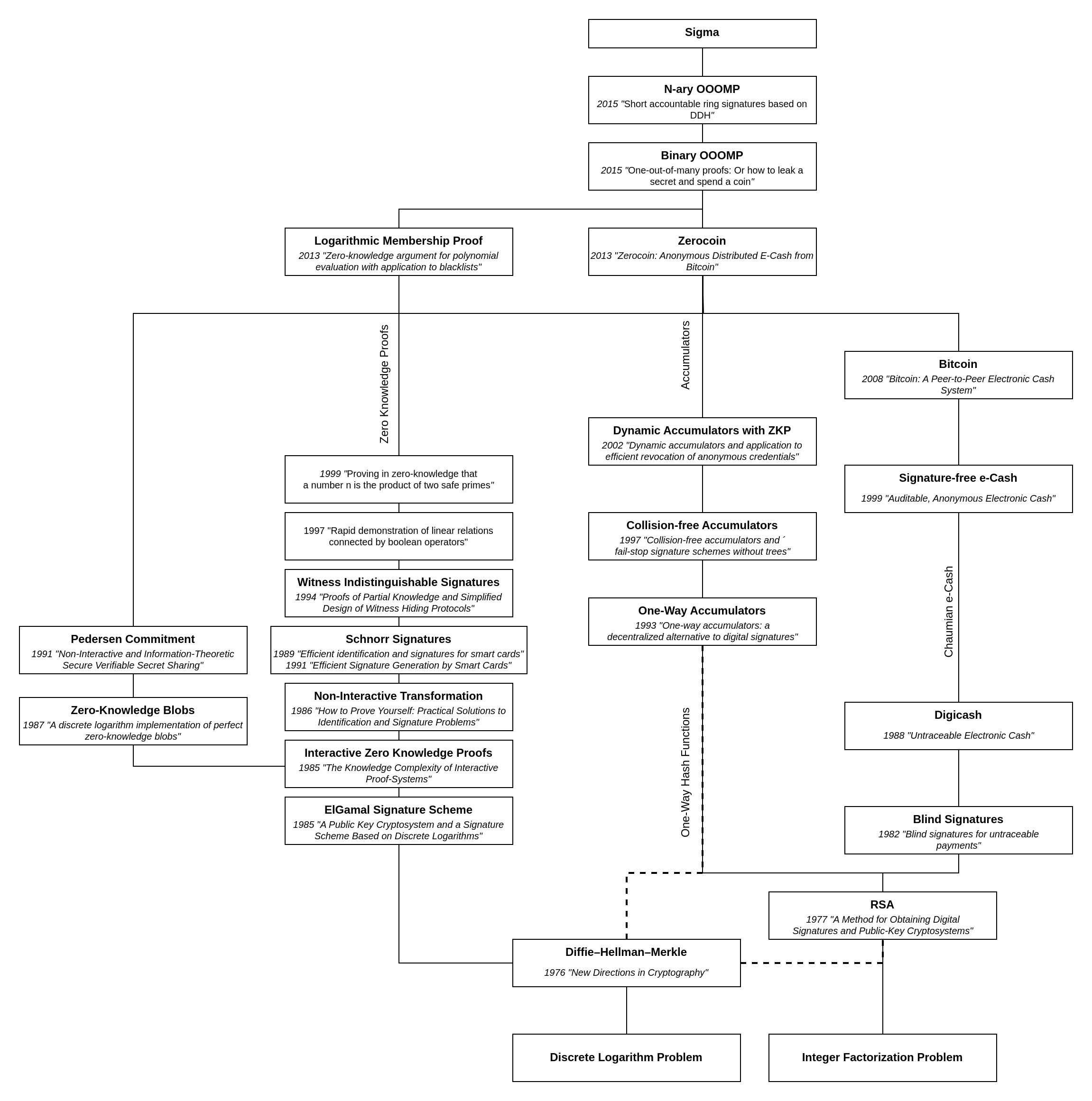

Tech-Tree

Note that this Tech-Tree omits detailed dependencies that are not specific to the Sigma upgrade to maintain readability.

References

| [1] | Miers, I., Garman, C., Green, M. and Rubin, A.D., 2013, May. Zerocoin: Anonymous distributed e-cash from bitcoin. In 2013 IEEE Symposium on Security and Privacy (pp. 397-411). IEEE. |

| [2] | Groth, J. and Kohlweiss, M., 2015, April. One-out-of-many proofs: Or how to leak a secret and spend a coin. In Annual International Conference on the Theory and Applications of Cryptographic Techniques (pp. 253-280). Berlin, Heidelberg: Springer Berlin Heidelberg. |

| [3] | Bootle, J., Cerulli, A., Chaidos, P., Ghadafi, E., Groth, J. and Petit, C., 2015, September. Short accountable ring signatures based on DDH. In European Symposium on Research in Computer Security (pp. 243-265). Cham: Springer International Publishing. |

| [4] | Pedersen, T.P., 1991, August. Non-interactive and information-theoretic secure verifiable secret sharing. In Annual international cryptology conference (pp. 129-140). Berlin, Heidelberg: Springer Berlin Heidelberg. |

| [5] | Maxwell, G. and Poelstra, A., 2015. Borromean ring signatures. https://raw.githubusercontent.com/Blockstream/borromean_paper/master/borromean_draft_0.01_34241bb.pdf (opens in a new tab) |

| [6] | Schnorr, C.P., 1990. Efficient identification and signatures for smart cards. In Advances in Cryptology—CRYPTO’89 Proceedings 9 (pp. 239-252). Springer New York. |

| [7] | Fiat, A. and Shamir, A., 1986, August. How to prove yourself: Practical solutions to identification and signature problems. In Conference on the theory and application of cryptographic techniques (pp. 186-194). Berlin, Heidelberg: Springer Berlin Heidelberg. |

| [8] | Reuben Yap, 2019, May. What is Sigma and why is it replacing Zerocoin in Zcoin?. https://firo.org/2019/03/20/what-is-sigma.html (opens in a new tab) |

| [9] | Bayer, S. and Groth, J., 2013. Zero-knowledge argument for polynomial evaluation with application to blacklists. In Advances in Cryptology–EUROCRYPT 2013: 32nd Annual International Conference on the Theory and Applications of Cryptographic Techniques, Athens, Greece, May 26-30, 2013. Proceedings 32 (pp. 646-663). Springer Berlin Heidelberg. |

| [10] | Reuben Yap, 2019, April. Lelantus: Firo's next gen privacy protocol. https://firo.org/2019/04/14/lelantus-firo.html (opens in a new tab) |

| [11] | Ruffing, T., Thyagarajan, S.A., Ronge, V. and Schroder, D., 2018, June. (Short Paper) Burning Zerocoins for Fun and for Profit-A Cryptographic Denial-of-Spending Attack on the Zerocoin Protocol. In 2018 Crypto Valley Conference on Blockchain Technology (CVCBT) (pp. 116-119). IEEE. |

| [12] | Cramer, R., Damgård, I. and Schoenmakers, B., 1994, August. Proofs of partial knowledge and simplified design of witness hiding protocols. In Annual International Cryptology Conference (pp. 174-187). Berlin, Heidelberg: Springer Berlin Heidelberg. |

| [13] | Shamir, A., 1979. How to share a secret. Communications of the ACM, 22(11), pp.612-613. |

| [14] | Benny Pinkas, 2019, February. Sigma Protocols (part1). https://www.youtube.com/watch?v=XT1Pad0DM24 (opens in a new tab) |

| [15] | The Art of Code, 2022, January. Useful functions for game designers - Lagrange Interpolation. https://www.youtube.com/watch?v=4S6G-zenbFM (opens in a new tab) |